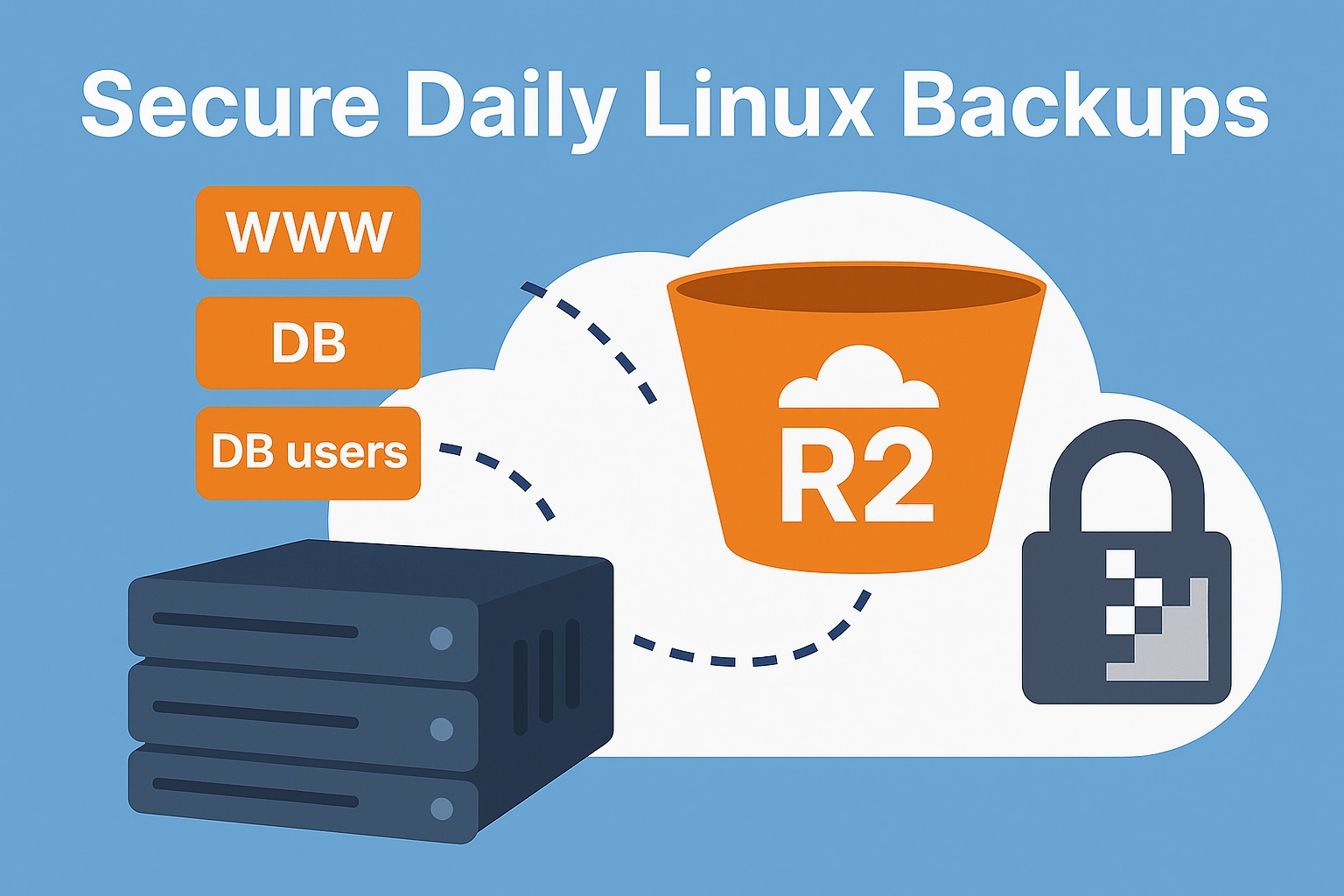

This guide walks you through setting up secure, automated daily backups of your Linux server’s websites and databases to Cloudflare R2 (S3-compatible storage), with a 14-day retention policy and Telegram notifications for failures. It’s designed for simplicity and security, ensuring you create everything the right way.

This guide is made for Debian-based systems like Ubuntu. If you use another package manager, adjust accordingly.

Note: Since writing this guide, I have released S3Safe, a more complete and advanced open source script you can download and set up. S3Safe does everything described in this guide and adds configurable components (WWW, DB, DB users), a snapshot of the data being backed up to avoid I/O errors, retry logic to minimize failures, push notifications on errors, logging, advanced debugging modes, a dry-run mode that skips the S3 upload for testing, and overall security improvements. You can still use this guide to learn how to generate PGP keys and set up Cloudflare R2.

Use Case

We want to back up our Linux server’s websites (in /var/www/) and MariaDB databases and users to Cloudflare R2 (S3-compatible storage) securely. Backups should run daily, be encrypted with PGP, and kept for 14 days. If a backup fails, we want a Telegram notification to alert us immediately.

Steps Overview

- Install dependencies on our Linux server.

- Create a Telegram bot for notifications.

- Generate PGP keys for encryption.

- Configure MariaDB credentials.

- Set up Cloudflare R2 storage.

- Create the backup script.

- Set up a cron job for daily backups.

- Test the backup and notification process.

- Configure Cloudflare R2 retention policy (14 days).

1. Install Dependencies

Update packages:

sudo apt update

Install tools:

sudo apt install -y tar gnupg mariadb-client curl unzip

Install AWS CLI:

curl "https://awscli.amazonaws.com/awscli-exe-linux-x86_64.zip" -o "awscliv2.zip"

unzip awscliv2.zip

sudo ./aws/install

rm -f awscliv2.zip

rm -rf aws

Verify:

tar --version

gpg --version

mariadb-dump --version

aws --version

We will create the following folders in our home directory:

- ~/backup

- ~/backup/logs

- ~/scripts

- ~/scripts/cronjobs

- ~/keys

mkdir -p ~/backup/logs ~/scripts/cronjobs ~/keys

chmod 700 ~/backup ~/scripts ~/keys

2. Create Telegram Bot

We will use a Telegram bot to send us a notification if a backup fails. This is an easy, secure, and free way to get push notifications. You can use another push notification service or email if you prefer.

- In Telegram on your phone, start a chat with @BotFather.

- Send /start, then /newbot.

- Username: ServerBot

- Copy the bot token (e.g., <your_bot_token>).

- Start a chat with your bot (@ServerBot).

- Send a message (e.g., “Hi”).

- Get chat ID:

- Open: https://api.telegram.org/bot<your_bot_token>/getUpdates.

- Find "chat":{"id":<your_chat_id> (e.g., <your_chat_id>).
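The chat ID lookup in the last two steps can also be done from a terminal. The following is a minimal sketch, assuming the getUpdates response is compact JSON (no whitespace between tokens), as in the pattern shown above. The function name extract_chat_id is hypothetical and not part of any Telegram tooling.

```shell
#!/bin/bash
# Hypothetical helper: print the first chat ID found in a getUpdates
# response read from stdin.
# Assumes compact JSON such as ..."chat":{"id":123456789,...
extract_chat_id() {
    grep -oE '"chat":\{"id":-?[0-9]+' | head -n 1 | grep -oE -- '-?[0-9]+$'
}

# Usage (placeholder as in the steps above):
#   curl -s "https://api.telegram.org/bot<your_bot_token>/getUpdates" | extract_chat_id
```

Group chats have negative IDs, which is why the pattern allows a leading minus sign.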

3. Generate PGP Keys (Windows Desktop)

We will create an OpenPGP key pair and use the public key on our server to encrypt backups before transferring them to Cloudflare. This way they stay protected even if our Cloudflare account is compromised. We will store the private key securely and use it only when we need to download and decrypt a backup. Don’t store the private key on your server!

- Install Gpg4win: Download from gpg4win.org.

- Open Kleopatra (included with Gpg4win).

- File > New Key Pair > Create a personal OpenPGP key pair.

- Name: Your name.

- Email: <[email protected]>.

- Passphrase: Set a strong one, and keep it in a password manager.

- Key Material: curve25519.

- Valid Until: Remove (never expire).

- Click Create.

- Export public key: Right-click key > Export > Save as public.asc.

- Export private key: Right-click key > Export Secret Keys > Save as private.asc (keep secure).

- Transfer public.asc to the server (e.g., via WinSCP to ~/keys/).
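Because the private key must never reach the server, it can be worth checking the exported file before or right after the transfer. The sketch below relies only on the standard ASCII-armor header lines of OpenPGP exports. The function name is_public_key_file is hypothetical.

```shell
#!/bin/bash
# Hypothetical helper: succeed only if the file contains an armored public
# key block and no armored private key block.
is_public_key_file() {
    local file="$1"
    grep -q -- '-----BEGIN PGP PUBLIC KEY BLOCK-----' "$file" &&
        ! grep -q -- '-----BEGIN PGP PRIVATE KEY BLOCK-----' "$file"
}

# Usage:
#   is_public_key_file ~/keys/public.asc && echo "OK: public key only"
```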

4. Import Public Key

On your server, import the public key we just uploaded:

gpg --import ~/keys/public.asc

Verify:

gpg --list-keys

Delete file:

rm ~/keys/public.asc

5. Configure MariaDB Credentials

Instead of hardcoding the root username and password in our backup script, we will store them in a .my.cnf file. If the file exists, the MariaDB client tools read the credentials from it automatically.

Create ~/.my.cnf:

nano ~/.my.cnf

Add:

[client]

user = root

password = <your_mariadb_root_password>

Save and exit.
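If you would rather not use an editor, the same file can be written from the shell. This is a sketch under assumptions: write_client_cnf is a hypothetical helper, and it expects the password to contain no newline characters.

```shell
#!/bin/bash
# Hypothetical helper: write a [client] section with the given credentials.
write_client_cnf() {
    local path="$1" user="$2" password="$3"
    # umask 077 makes a newly created file readable by its owner only
    ( umask 077 && printf '[client]\nuser = %s\npassword = %s\n' "$user" "$password" > "$path" ) || return 1
    # Also tighten permissions in case the file already existed
    chmod 600 "$path"
}

# Usage: prompt for the password so it does not end up in shell history
#   read -rsp "MariaDB root password: " pw && write_client_cnf "$HOME/.my.cnf" root "$pw"
```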

Set permissions:

chmod 600 ~/.my.cnf

Test it works:

mariadb -e "SHOW DATABASES;"

6. Set Up Cloudflare R2

- Sign in to Cloudflare dashboard.

- In the left menu, go to “R2 Object Storage” and enable R2.

- In Overview click “Create bucket”

- Name: <your_bucket_name>.

- Location: Choose your region (e.g., EU for GDPR).

- Default storage class: Standard

- Create R2 API Token:

- Go back to R2 Object Storage > Overview > { }API > Manage API Tokens

- Create API Token

- Token Name: R2-Backup-Token

- Permission: Object Read & Write.

- Specify bucket(s) > Apply to specific buckets only: Select your bucket.

- TTL: Forever

- No IP restrictions.

- Save: Access Key ID, Secret Access Key, Endpoint URL (e.g., https://<account_id>.r2.cloudflarestorage.com).

- Configure AWS CLI:

aws configure

Enter: Access Key ID, Secret Access Key, region: auto, output: json.
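The same four values can be set without the interactive prompt by using the CLI's `aws configure set` subcommand. The wrapper below is a sketch: configure_r2_cli is a hypothetical name, and it writes to the default profile unless a profile name is passed as the third argument.

```shell
#!/bin/bash
# Hypothetical helper: store the R2 credentials and defaults in the AWS CLI
# configuration, using the values from the interactive step above.
configure_r2_cli() {
    local key_id="$1" secret="$2" profile="${3:-default}"
    aws configure set aws_access_key_id "$key_id" --profile "$profile" &&
    aws configure set aws_secret_access_key "$secret" --profile "$profile" &&
    aws configure set region auto --profile "$profile" &&
    aws configure set output json --profile "$profile"
}

# Usage: read the secret from a prompt rather than typing it on the command line
#   read -rsp "Secret Access Key: " secret && configure_r2_cli "<your_access_key_id>" "$secret"
```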

Test our endpoint:

aws s3 ls --endpoint-url <your_endpoint>

7. Create Backup Script

Create script:

nano ~/scripts/cronjobs/backup-www-db.sh

Add the following code and replace <your_bucket_name>, <your_account_id>, <[email protected]>, <your_bot_token>, and <your_chat_id> with your values:

#!/bin/bash

# cron runs with a minimal PATH; include /usr/local/bin, where the AWS CLI
# installer places the aws command by default
export PATH="/usr/local/bin:$PATH"

# Configuration
TIMESTAMP=$(date +%Y-%m-%d_%H-%M-%S)
BACKUP_DIR="$HOME/backup"
LOG_FILE="$BACKUP_DIR/logs/backup-$TIMESTAMP.log"
R2_BUCKET="<your_bucket_name>"
R2_ENDPOINT="https://<your_account_id>.r2.cloudflarestorage.com"
GPG_RECIPIENT="<[email protected]>"
TELEGRAM_BOT_TOKEN="<your_bot_token>"
TELEGRAM_CHAT_ID="<your_chat_id>"
STATUS="SUCCESS"

# Create log file
mkdir -p "$BACKUP_DIR/logs"
echo "Backup started: $TIMESTAMP" > "$LOG_FILE"

# Function to send Telegram notification and exit
send_notification_and_exit() {
    curl -s -X POST "https://api.telegram.org/bot$TELEGRAM_BOT_TOKEN/sendMessage" -d chat_id="$TELEGRAM_CHAT_ID" -d text="Server Backup failed at $TIMESTAMP"
    exit 1
}

# Backup Websites: one encrypted archive per directory in /var/www
for SITE in /var/www/*; do
    if [ -d "$SITE" ]; then
        SITE_NAME=$(basename "$SITE")
        echo "Backing up website: $SITE_NAME" >> "$LOG_FILE"
        if tar -czf "$BACKUP_DIR/www-$SITE_NAME.tar.gz" --warning=no-file-changed -C /var/www "$SITE_NAME"; then
            if gpg --batch --encrypt --recipient "$GPG_RECIPIENT" --trust-model always "$BACKUP_DIR/www-$SITE_NAME.tar.gz"; then
                if aws s3 cp "$BACKUP_DIR/www-$SITE_NAME.tar.gz.gpg" "s3://$R2_BUCKET/www/$SITE_NAME/$TIMESTAMP/www-$SITE_NAME.tar.gz.gpg" --endpoint-url "$R2_ENDPOINT" --checksum-algorithm CRC32; then
                    rm "$BACKUP_DIR/www-$SITE_NAME.tar.gz" "$BACKUP_DIR/www-$SITE_NAME.tar.gz.gpg"
                    echo "Website backed up: $SITE_NAME" >> "$LOG_FILE"
                else
                    echo "Upload failed: $SITE_NAME" >> "$LOG_FILE"
                    STATUS="FAILED"
                    send_notification_and_exit
                fi
            else
                echo "Encryption failed: $SITE_NAME" >> "$LOG_FILE"
                STATUS="FAILED"
                send_notification_and_exit
            fi
        else
            echo "Website backup failed: $SITE_NAME" >> "$LOG_FILE"
            STATUS="FAILED"
            send_notification_and_exit
        fi
    fi
done

# Backup Databases
# The pattern is anchored with ^ and $ so only exact system names are excluded;
# without anchors, a database such as "mysql_app" or "sysadmin" would be skipped.
DBS=$(mariadb -e "SHOW DATABASES;" | grep -Ev "^(Database|information_schema|mysql|performance_schema|sys|phpmyadmin)$")

for DB in $DBS; do
    echo "Backing up database: $DB" >> "$LOG_FILE"
    if mariadb-dump --databases "$DB" > "$BACKUP_DIR/db-$DB.sql"; then
        if (cd "$BACKUP_DIR" && tar -czf "db-$DB.tar.gz" --warning=no-file-changed "db-$DB.sql"); then
            rm "$BACKUP_DIR/db-$DB.sql"
            if gpg --batch --encrypt --recipient "$GPG_RECIPIENT" --trust-model always "$BACKUP_DIR/db-$DB.tar.gz"; then
                if aws s3 cp "$BACKUP_DIR/db-$DB.tar.gz.gpg" "s3://$R2_BUCKET/db/$DB/$TIMESTAMP/db-$DB.tar.gz.gpg" --endpoint-url "$R2_ENDPOINT" --checksum-algorithm CRC32; then
                    rm "$BACKUP_DIR/db-$DB.tar.gz" "$BACKUP_DIR/db-$DB.tar.gz.gpg"
                    echo "Database backed up: $DB" >> "$LOG_FILE"
                else
                    echo "Upload failed: $DB" >> "$LOG_FILE"
                    STATUS="FAILED"
                    send_notification_and_exit
                fi
            else
                echo "Encryption failed: $DB" >> "$LOG_FILE"
                STATUS="FAILED"
                send_notification_and_exit
            fi
        else
            echo "Compression failed: $DB" >> "$LOG_FILE"
            STATUS="FAILED"
            send_notification_and_exit
        fi
    else
        echo "Database dump failed: $DB" >> "$LOG_FILE"
        STATUS="FAILED"
        send_notification_and_exit
    fi
done

# Backup DB Users
echo "Backing up database users" >> "$LOG_FILE"

# Start from an empty file so grants left over from a failed run are not duplicated
: > "$BACKUP_DIR/db-users.sql"

if mariadb -e "SELECT CONCAT('SHOW GRANTS FOR ''', user, '''@''', host, ''';') FROM mysql.user WHERE user NOT IN ('root', 'mysql.sys', 'mysql.session');" | tail -n +2 | while read -r grant; do
        # -N omits the "Grants for ..." header row from each result
        mariadb -N -e "$grant" >> "$BACKUP_DIR/db-users.sql"
    done; then
    if (cd "$BACKUP_DIR" && tar -czf "db-users.tar.gz" --warning=no-file-changed "db-users.sql"); then
        rm "$BACKUP_DIR/db-users.sql"
        if gpg --batch --encrypt --recipient "$GPG_RECIPIENT" --trust-model always "$BACKUP_DIR/db-users.tar.gz"; then
            if aws s3 cp "$BACKUP_DIR/db-users.tar.gz.gpg" "s3://$R2_BUCKET/db-users/global/$TIMESTAMP/db-users.tar.gz.gpg" --endpoint-url "$R2_ENDPOINT" --checksum-algorithm CRC32; then
                rm "$BACKUP_DIR/db-users.tar.gz" "$BACKUP_DIR/db-users.tar.gz.gpg"
                echo "Database users backed up" >> "$LOG_FILE"
            else
                echo "Upload failed: db-users" >> "$LOG_FILE"
                STATUS="FAILED"
                send_notification_and_exit
            fi
        else
            echo "Encryption failed: db-users" >> "$LOG_FILE"
            STATUS="FAILED"
            send_notification_and_exit
        fi
    else
        echo "Compression failed: db-users" >> "$LOG_FILE"
        STATUS="FAILED"
        send_notification_and_exit
    fi
else
    echo "Database users dump failed" >> "$LOG_FILE"
    STATUS="FAILED"
    send_notification_and_exit
fi

echo "Backup completed: $TIMESTAMP" >> "$LOG_FILE"

Save and exit.
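Each of the three stages in the script repeats the same encrypt, upload, and clean-up sequence. One way to cut down on the nesting would be to move that sequence into a function. The following is a sketch and not the script as published: encrypt_and_upload is a hypothetical helper that reuses the GPG_RECIPIENT, R2_BUCKET, and R2_ENDPOINT variables from the configuration block.

```shell
#!/bin/bash
# Hypothetical helper: encrypt an archive, upload it to R2, then remove the
# local copies. Returns 1 if encryption fails and 2 if the upload fails.
encrypt_and_upload() {
    local archive="$1" remote_key="$2"
    gpg --batch --encrypt --recipient "$GPG_RECIPIENT" --trust-model always "$archive" || return 1
    aws s3 cp "$archive.gpg" "s3://$R2_BUCKET/$remote_key" \
        --endpoint-url "$R2_ENDPOINT" --checksum-algorithm CRC32 || return 2
    # Local files are only removed once the upload has succeeded
    rm -f "$archive" "$archive.gpg"
}

# Usage inside the website loop, for example:
#   encrypt_and_upload "$BACKUP_DIR/www-$SITE_NAME.tar.gz" \
#       "www/$SITE_NAME/$TIMESTAMP/www-$SITE_NAME.tar.gz.gpg" || send_notification_and_exit
```

The distinct return codes let the caller log whether encryption or the upload was the step that failed.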

Make the file executable:

chmod +x ~/scripts/cronjobs/backup-www-db.sh

8. Set up Cron job

Edit crontab:

crontab -e

We will configure the backup to run every night at 3am:

0 3 * * * /home/<your_user>/scripts/cronjobs/backup-www-db.sh

Replace <your_user> with your username.

Save and exit.
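As an alternative to editing the crontab by hand, the entry can be appended from the shell. This sketch uses only `crontab -l` and `crontab -`. The function name add_cron_entry is hypothetical, and it skips the write if the exact line is already present.

```shell
#!/bin/bash
# Hypothetical helper: append a line to the current user's crontab unless
# that exact line is already there.
add_cron_entry() {
    local entry="$1" current
    # crontab -l exits non-zero when no crontab exists yet; treat that as empty
    current=$(crontab -l 2>/dev/null) || current=""
    if printf '%s\n' "$current" | grep -Fxq -- "$entry"; then
        return 0
    fi
    {
        if [ -n "$current" ]; then printf '%s\n' "$current"; fi
        printf '%s\n' "$entry"
    } | crontab -
}

# Usage:
#   add_cron_entry "0 3 * * * /home/<your_user>/scripts/cronjobs/backup-www-db.sh"
```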

Verify:

crontab -l

9. Test Backup and Notification

Run script:

~/scripts/cronjobs/backup-www-db.sh

Check log:

cat ~/backup/logs/backup-*.log

Check R2:

aws s3 ls s3://<your_bucket_name>/ --endpoint-url <your_endpoint> --recursive

Test notification:

- Change GPG_RECIPIENT to [email protected] in backup-www-db.sh.

- Run the script again.

- Confirm Telegram message: “Server Backup failed at $TIMESTAMP”.

- Fix GPG_RECIPIENT back to the correct email.

- Download a backup from R2 (via Cloudflare dashboard).

- Decrypt (Kleopatra) and decompress (7-Zip) on Windows.

- Verify contents (e.g., SQL file).
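The log check from this step can also be scripted, for example to confirm each morning that the most recent run finished. This sketch assumes the log naming and the "Backup completed:" line written by the backup script. The function name latest_backup_ok is hypothetical.

```shell
#!/bin/bash
# Hypothetical helper: succeed only if the newest backup log contains the
# completion line written at the end of the backup script.
latest_backup_ok() {
    local log_dir="$1" latest="" f
    # Log names embed a YYYY-MM-DD_HH-MM-SS timestamp, so the glob's lexical
    # order is chronological and the last match is the newest log
    for f in "$log_dir"/backup-*.log; do
        if [ -e "$f" ]; then latest="$f"; fi
    done
    [ -n "$latest" ] && grep -q '^Backup completed:' "$latest"
}

# Usage:
#   latest_backup_ok ~/backup/logs || echo "Last backup did not complete"
```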

10. Configure R2 Retention (14 Days)

Go to R2 Object Storage > <your_bucket_name> Settings > Object lifecycle rules > Add rule:

- Name: Delete-After-14-Days.

- Delete uploaded objects after: 14 days

- Rule status: Enabled

Save changes.
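The lifecycle rule runs on Cloudflare's side, so nothing on the server enforces it. To spot-check that old backups are really disappearing, you can compare the timestamps in the object keys against the 14-day window. The sketch below assumes GNU date (standard on Debian and Ubuntu) and compares calendar days only. The function name older_than_days is hypothetical.

```shell
#!/bin/bash
# Hypothetical helper: succeed if a backup timestamp (YYYY-MM-DD_HH-MM-SS,
# as used in the object keys) is more than the given number of days old.
# The optional third argument is "now" in epoch seconds, useful for testing.
older_than_days() {
    local stamp="$1" days="$2" now="${3:-$(date -u +%s)}"
    local day="${stamp%%_*}" then_s
    # Only the date part is compared; GNU date parses YYYY-MM-DD directly
    then_s=$(date -u -d "$day" +%s) || return 2
    [ $(( (now - then_s) / 86400 )) -gt "$days" ]
}

# Usage:
#   older_than_days "2024-01-01_03-00-00" 14 && echo "should have been deleted"
```

Any timestamp that still shows up in the `aws s3 ls` listing and passes this check suggests the rule is not applying as expected.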

Conclusion

You now have a secure, automated backup system for your Linux server, storing encrypted backups in Cloudflare R2 with a 14-day retention policy. Telegram notifications alert you immediately if a backup fails.

You can monitor your R2 usage in R2 Object Storage > Overview, and check your spending in Manage Account > Billing.