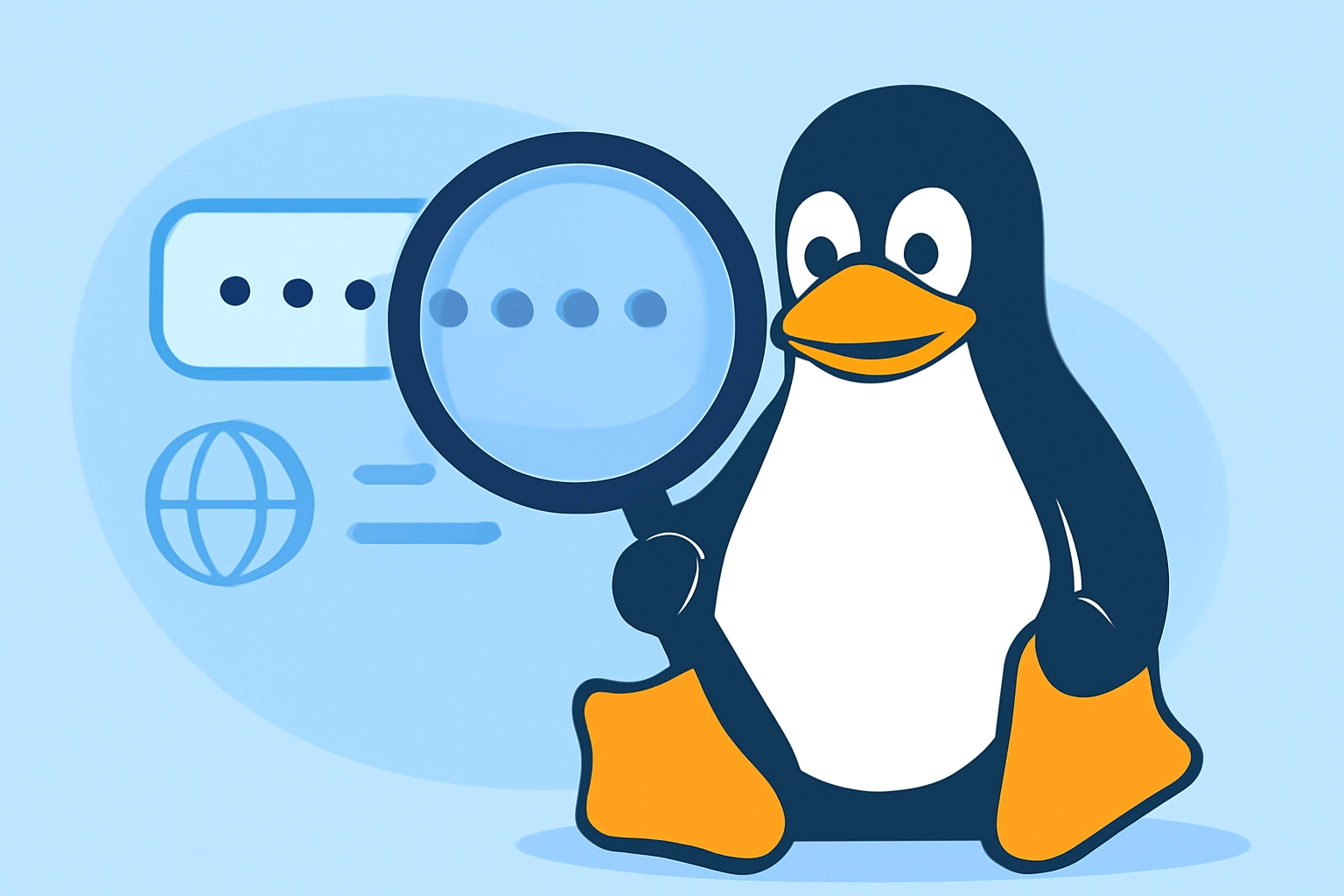

Nice WHOIS – Clean WHOIS Bash Script with Smart Fallback

Nice WHOIS is a Bash script that performs clean, color-formatted WHOIS lookups. It parses and deduplicates raw `whois` output, strips boilerplate legal notices, and falls back to RDAP or web lookups when the standard `whois` command is unavailable.
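The cleanup-and-fallback flow described above can be sketched in Bash roughly as follows. This is a minimal illustration, not the script's actual code. The function names `clean_whois`, `colorize`, and `lookup`, the specific boilerplate markers, and the public `rdap.org` bootstrap redirector are all hypothetical choices. The web-lookup fallback is not shown.

```bash
#!/usr/bin/env bash
# Hypothetical sketch of a whois-cleaning pipeline with an RDAP fallback.
# Function names, boilerplate markers, and the rdap.org endpoint are
# illustrative assumptions, not Nice WHOIS internals.

# Strip comment lines, blank lines, and trailing legal notices, trim
# whitespace, and deduplicate while keeping the first occurrence.
clean_whois() {
  awk '
    /^>>> Last update of WHOIS database/ { exit }  # registry footer starts here
    /^(NOTICE|TERMS OF USE):/            { exit }  # legal boilerplate follows
    /^[ \t]*[%#]/                        { next }  # comment lines
    {
      sub(/^[ \t\r]+/, "")                         # trim leading whitespace
      sub(/[ \t\r]+$/, "")                         # trim trailing whitespace
      if ($0 == "") next                           # skip blank lines
      if (!seen[$0]++) print                       # print first occurrence only
    }
  '
}

# Color the "Key:" part of each line cyan, but only when stdout is a terminal
# (assumed behavior for illustration).
colorize() {
  if [ -t 1 ]; then
    awk '{
      i = index($0, ":")
      if (i) printf "\033[36m%s\033[0m%s\n", substr($0, 1, i), substr($0, i + 1)
      else print
    }'
  else
    cat
  fi
}

# Prefer the local whois client; otherwise fall back to RDAP over HTTPS.
lookup() {
  local domain=$1
  if command -v whois >/dev/null 2>&1; then
    whois "$domain" | clean_whois
  elif command -v curl >/dev/null 2>&1; then
    # rdap.org redirects to the authoritative RDAP server; -L follows it.
    curl -fsSL "https://rdap.org/domain/$domain"
  else
    echo "error: neither whois nor curl is available" >&2
    return 1
  fi
}
```

For example, `lookup example.com | colorize` would print the cleaned record with keys highlighted when writing to a terminal. On the RDAP path the output is raw JSON rather than key/value lines, so a real script would still need to format it. Deduplication uses the common awk idiom `!seen[$0]++`, which keeps the original line order.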